Simulates draws from the predictive density of the returns and the latent log-volatility process. The same mean model is used for prediction as was used for fitting, which is either a) no mean parameter, b) constant mean, c) AR(k) structure, or d) general Bayesian regression. In the last case, new regressors need to be provided for prediction.
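
To make the idea of simulating from the predictive density concrete, here is a minimal base-R sketch for case a) (no mean parameter). It assumes the standard SV recursion h_(t+1) = mu + phi * (h_t - mu) + sigma * eta_t with y_t = exp(h_t / 2) * eps_t and Gaussian innovations, and it ignores leverage. All object names (`mu`, `phi`, `sigma`, `h_last`, `h_pred`, `y_pred`, `vol_pred`) are hypothetical, the "posterior draws" are random placeholders, and this is not the package's actual implementation.

```r
# Hypothetical stand-ins for posterior draws; in practice these would come
# from a fitted model rather than from random number generators.
set.seed(1)
ndraws <- 1000L
steps  <- 5L
mu     <- rnorm(ndraws, mean = -10, sd = 0.3)       # level of log-volatility
phi    <- runif(ndraws, min = 0.95, max = 0.99)     # persistence
sigma  <- abs(rnorm(ndraws, mean = 0.2, sd = 0.02)) # volatility of log-volatility
h_last <- rnorm(ndraws, mean = -9, sd = 0.5)        # draws of h_n

h_pred <- matrix(NA_real_, nrow = ndraws, ncol = steps)
y_pred <- matrix(NA_real_, nrow = ndraws, ncol = steps)

# For every posterior draw, propagate the latent state one step at a time
# and then draw a return conditional on the simulated log-volatility.
h_prev <- h_last
for (s in seq_len(steps)) {
  h_prev <- mu + phi * (h_prev - mu) + sigma * rnorm(ndraws)
  h_pred[, s] <- h_prev
  y_pred[, s] <- exp(h_prev / 2) * rnorm(ndraws)
}

# Predictive draws of the standard deviations
vol_pred <- exp(h_pred / 2)
```

Each row of `h_pred`, `y_pred`, and `vol_pred` is one simulated path, so parameter uncertainty and future innovation uncertainty both enter the predictive draws.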

# S3 method for class 'svdraws'

predict(object, steps = 1L, newdata = NULL, ...)

Arguments

- object

svdraws or svldraws object.

- steps

optional single number, coercible to integer. Denotes the number of steps to forecast.

- newdata

only used in case d) of the description; corresponds to the input parameter designmatrix in svsample. A matrix of regressors with number of rows equal to the parameter steps.

- ...

currently ignored.
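
As a small illustration of the shape requirement on newdata, the following base-R sketch builds a regressor matrix with one row per forecast step. The column names and values are hypothetical, and the assumption that the columns should line up with those of the designmatrix used for fitting is ours, inferred from the description above.

```r
steps <- 5L

# Hypothetical regressors: an intercept column plus one covariate.
newdata <- cbind(intercept = rep_len(1, steps),
                 x = seq_len(steps) / 10)

# One row per step to forecast
stopifnot(is.matrix(newdata), nrow(newdata) == steps)
```

A matrix like this would then be passed as the third argument, as in `predict(fit, steps, newdata)`, where `fit` stands for a model fitted with a matrix-valued designmatrix.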

Value

Returns an object of class svpredict, a list containing three elements:

- vol

mcmc.list object of simulations from the predictive density of the standard deviations sd_(n+1),...,sd_(n+steps)

- h

mcmc.list object of simulations from the predictive density of h_(n+1),...,h_(n+steps)

- y

mcmc.list object of simulations from the predictive density of y_(n+1),...,y_(n+steps)

Note

You can use the resulting object within plot.svdraws (see example below), or use the list items in the usual coda methods for mcmc objects to print, plot, or summarize the predictions.
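
If only predictive intervals are needed, a plain matrix of draws can also be summarized with base R. The sketch below uses a hypothetical placeholder matrix `ydraws` (rows are draws, columns are forecast horizons) in place of real predictive draws; with actual output, such a matrix could be obtained with `as.matrix()`, for which coda provides methods for mcmc objects.

```r
# Hypothetical stand-in for predictive draws of y_(n+1),...,y_(n+3);
# rows are draws, columns are forecast horizons.
set.seed(42)
ydraws <- matrix(rnorm(3000, sd = 0.015), ncol = 3,
                 dimnames = list(NULL, paste0("y_", 101:103)))

# Median and 95% predictive interval for each horizon
pred_summary <- t(apply(ydraws, 2, quantile, probs = c(0.025, 0.5, 0.975)))
pred_summary
```

This gives one row per forecast horizon and one column per quantile, which is the same layout as the quantile table printed by the summary method in the examples below.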


Examples

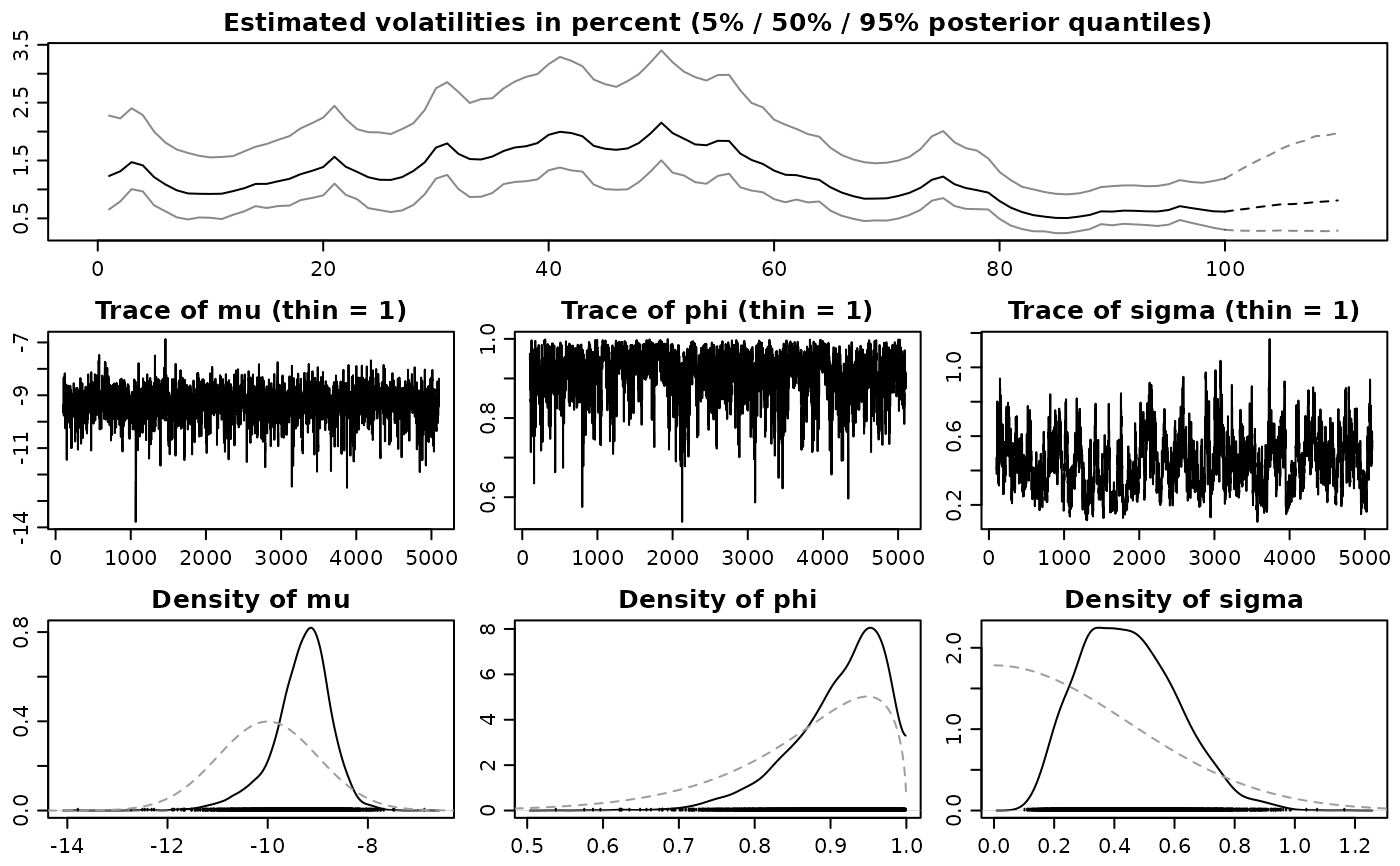

# Example 1

## Simulate a short and highly persistent SV process

sim <- svsim(100, mu = -10, phi = 0.99, sigma = 0.2)

## Obtain 5000 draws from the sampler (that's not a lot)

draws <- svsample(sim$y, draws = 5000, burnin = 100,

priormu = c(-10, 1), priorphi = c(20, 1.5), priorsigma = 0.2)

#> Done!

#> Summarizing posterior draws...

## Predict 10 days ahead

fore <- predict(draws, 10)

## Check out the results

summary(predlatent(fore))

#>

#> Iterations = 1:5000

#> Thinning interval = 1

#> Number of chains = 1

#> Sample size per chain = 5000

#>

#> 1. Empirical mean and standard deviation for each variable,

#> plus standard error of the mean:

#>

#> Mean SD Naive SE Time-series SE

#> h_101 -8.704 0.5897 0.008340 0.01557

#> h_102 -8.706 0.6374 0.009014 0.01488

#> h_103 -8.693 0.6636 0.009385 0.01474

#> h_104 -8.686 0.6925 0.009794 0.01451

#> h_105 -8.677 0.7071 0.009999 0.01440

#> h_106 -8.671 0.7292 0.010313 0.01525

#> h_107 -8.676 0.7510 0.010621 0.01520

#> h_108 -8.680 0.7555 0.010685 0.01632

#> h_109 -8.679 0.7688 0.010872 0.01703

#> h_110 -8.679 0.7733 0.010936 0.01641

#>

#> 2. Quantiles for each variable:

#>

#> 2.5% 25% 50% 75% 97.5%

#> h_101 -9.886 -9.072 -8.682 -8.329 -7.534

#> h_102 -10.061 -9.094 -8.681 -8.301 -7.470

#> h_103 -10.096 -9.089 -8.659 -8.282 -7.423

#> h_104 -10.140 -9.100 -8.647 -8.247 -7.406

#> h_105 -10.183 -9.096 -8.640 -8.240 -7.322

#> h_106 -10.175 -9.099 -8.636 -8.227 -7.244

#> h_107 -10.227 -9.133 -8.614 -8.209 -7.213

#> h_108 -10.278 -9.123 -8.619 -8.208 -7.265

#> h_109 -10.371 -9.122 -8.620 -8.210 -7.211

#> h_110 -10.358 -9.129 -8.616 -8.206 -7.202

#>

summary(predy(fore))

#>

#> Iterations = 1:5000

#> Thinning interval = 1

#> Number of chains = 1

#> Sample size per chain = 5000

#>

#> 1. Empirical mean and standard deviation for each variable,

#> plus standard error of the mean:

#>

#> Mean SD Naive SE Time-series SE

#> y_101 4.308e-05 0.01412 0.0001997 0.0001997

#> y_102 -2.082e-04 0.01415 0.0002001 0.0002112

#> y_103 -2.343e-04 0.01499 0.0002120 0.0002138

#> y_104 2.270e-04 0.01465 0.0002072 0.0002072

#> y_105 5.226e-04 0.01486 0.0002102 0.0002102

#> y_106 2.889e-04 0.01476 0.0002087 0.0002087

#> y_107 5.427e-06 0.01498 0.0002118 0.0002076

#> y_108 1.677e-04 0.01518 0.0002147 0.0002147

#> y_109 2.105e-04 0.01504 0.0002127 0.0002127

#> y_110 4.398e-04 0.01496 0.0002116 0.0001990

#>

#> 2. Quantiles for each variable:

#>

#> 2.5% 25% 50% 75% 97.5%

#> y_101 -0.02860 -0.008340 1.822e-04 0.008756 0.02677

#> y_102 -0.02868 -0.008709 -8.904e-05 0.008227 0.02810

#> y_103 -0.02960 -0.008652 -2.456e-04 0.008494 0.02822

#> y_104 -0.02920 -0.008210 3.497e-04 0.008860 0.02982

#> y_105 -0.02813 -0.008138 5.901e-04 0.009010 0.03101

#> y_106 -0.02940 -0.008443 3.472e-04 0.008827 0.03011

#> y_107 -0.03089 -0.008642 2.985e-04 0.008614 0.03004

#> y_108 -0.03051 -0.008451 2.008e-04 0.008611 0.03029

#> y_109 -0.02922 -0.008442 -1.698e-05 0.008479 0.03084

#> y_110 -0.02944 -0.008413 2.636e-04 0.008825 0.03177

#>

plot(draws, forecast = fore)

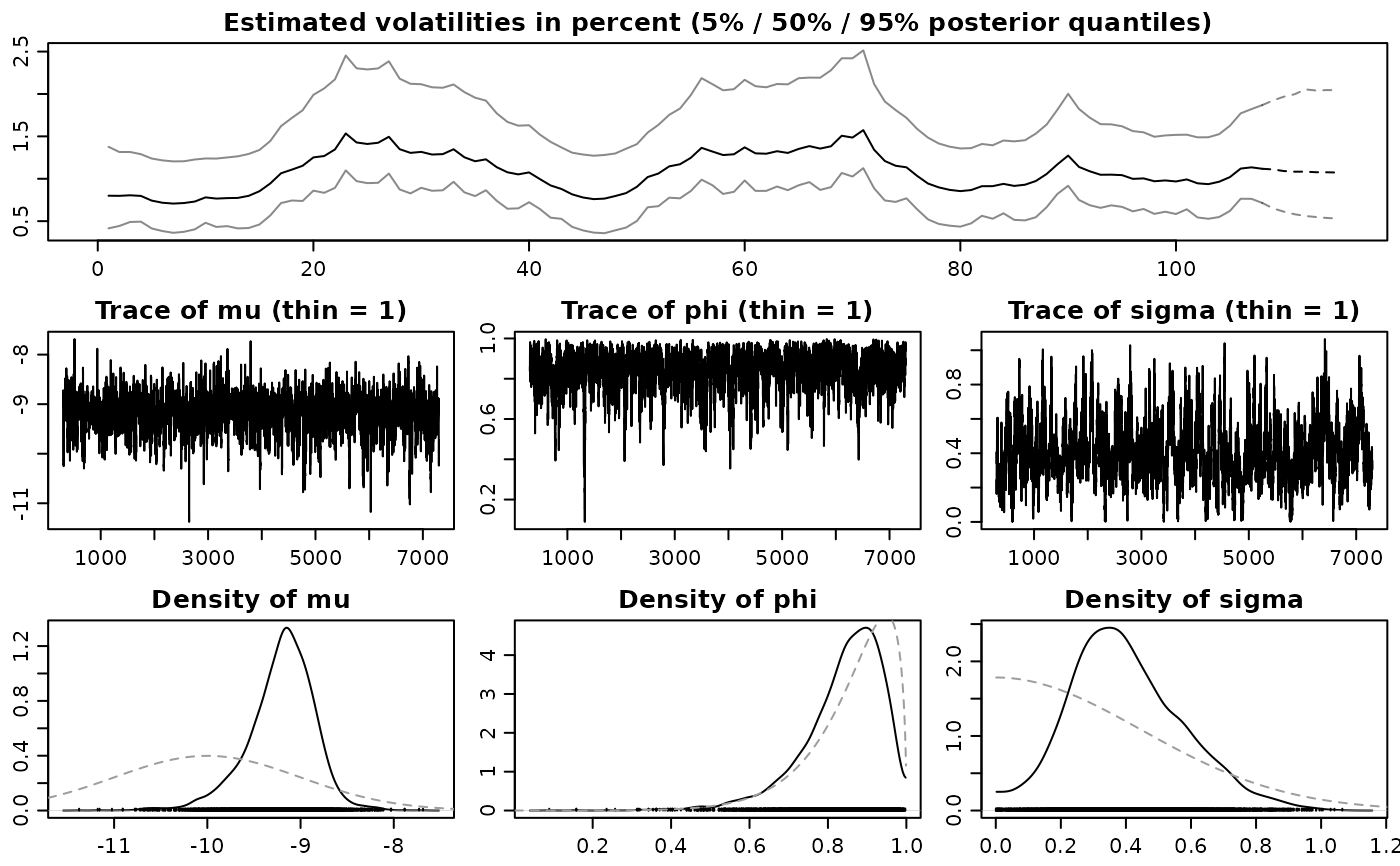

# Example 2

## Simulate now an SV process with an AR(1) mean structure

len <- 109L

simar <- svsim(len, phi = 0.93, sigma = 0.15, mu = -9)

for (i in 2:len) {

simar$y[i] <- 0.1 - 0.7 * simar$y[i-1] + simar$vol[i] * rnorm(1)

}

## Obtain 7000 draws

drawsar <- svsample(simar$y, draws = 7000, burnin = 300,

designmatrix = "ar1", priormu = c(-10, 1), priorphi = c(20, 1.5),

priorsigma = 0.2)

#> Done!

#> Summarizing posterior draws...

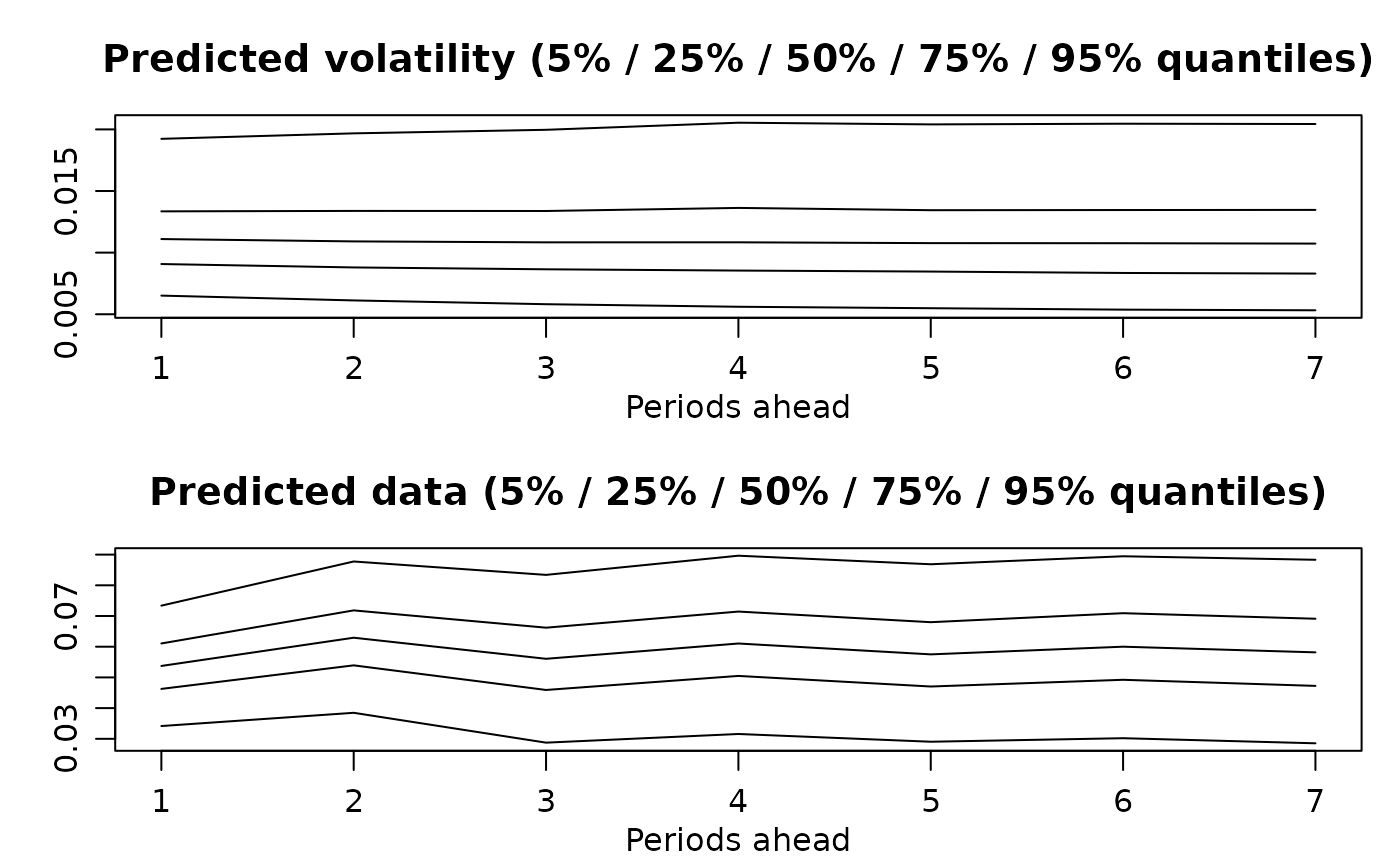

## Predict 7 days ahead (using AR(1) mean for the returns)

forear <- predict(drawsar, 7)

## Check out the results

plot(forear)


plot(drawsar, forecast = forear)


if (FALSE) { # \dontrun{

# Example 3

## Simulate now an SV process with leverage and with non-zero mean

len <- 96L

regressors <- cbind(rep_len(1, len), rgamma(len, 0.5, 0.25))

betas <- rbind(-1.1, 2)

simreg <- svsim(len, rho = -0.42)

simreg$y <- simreg$y + as.numeric(regressors %*% betas)

## Obtain 12000 draws

drawsreg <- svsample(simreg$y, draws = 12000, burnin = 3000,

designmatrix = regressors, priormu = c(-10, 1), priorphi = c(20, 1.5),

priorsigma = 0.2, priorrho = c(4, 4))

## Predict 5 days ahead using new regressors

predlen <- 5L

predregressors <- cbind(rep_len(1, predlen), rgamma(predlen, 0.5, 0.25))

forereg <- predict(drawsreg, predlen, predregressors)

## Check out the results

summary(predlatent(forereg))

summary(predy(forereg))

plot(forereg)

plot(drawsreg, forecast = forereg)

} # }
